Live Job Update with Pulsar

Description of The Problem

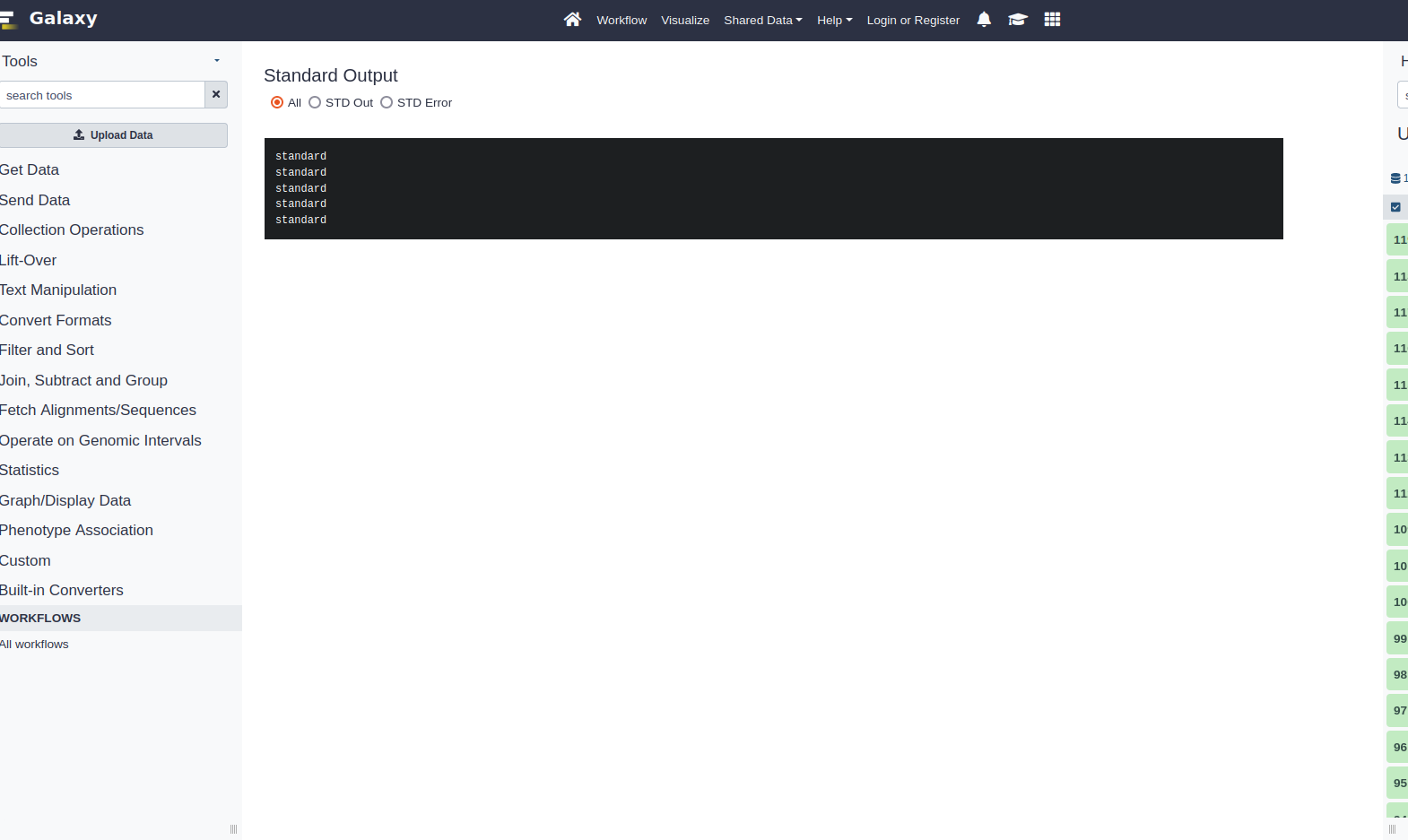

When running a job in Galaxy, users have limited visibility into what the job is doing. By clicking the "Eye" icon in a job listing in the job queue, they can view the job's output. However, this view a) is not updated unless they click the "Eye" icon again or refresh the page, and b) shows only the job output. Job output refers only to the output files created by the software the job runs, as specified in the corresponding tool description. After a job has finished, users can also access extra information, such as the standard output and standard error produced while it ran. There is currently no way to view this information while the job is running, even though it is produced at that time. Users can work around this by redirecting standard output to an output file and repeatedly clicking the "Eye" icon, but a view that shows standard output and error and updates as the job runs would be a major improvement.

Current Blockers

This issue has been raised in the Galaxy community before, but was shelved due to concerns about a) how long this feature would take to implement, b) difficulties with how Galaxy currently handles standard output and error, and c) whether any practical solution could perfectly cover all use cases (see here).

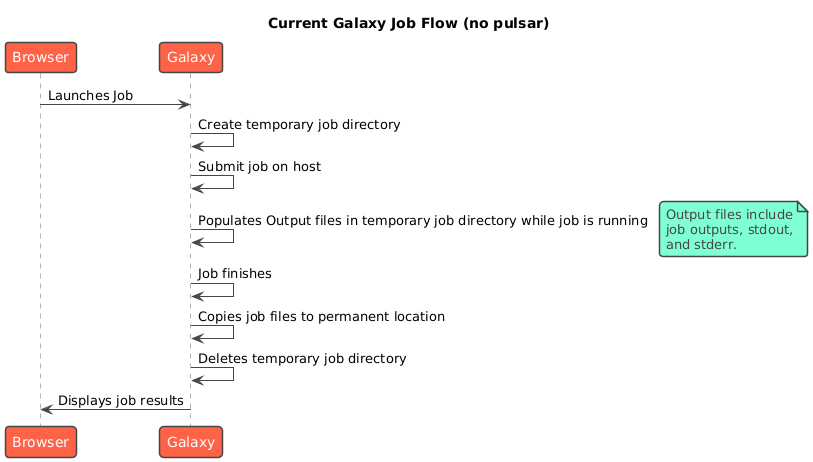

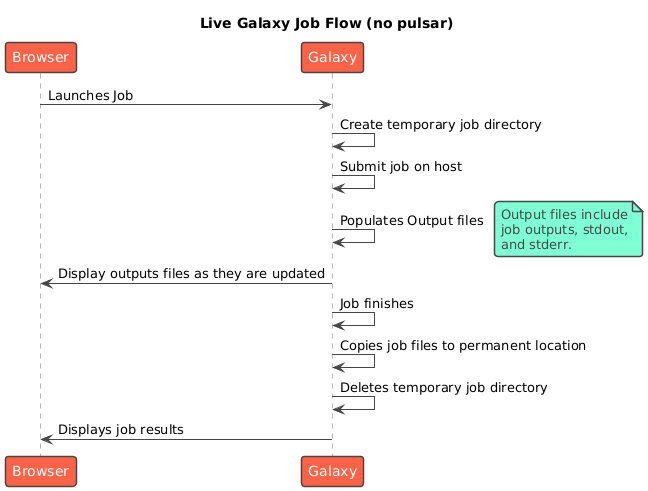

The first issue will be discussed later; let's first look at the latter two in depth. Currently, when Galaxy runs a job (let's assume a local job runner), it follows this workflow:

With this workflow, the feature seems straightforward to implement: simply create a page that renders the standard output/error files in real time.

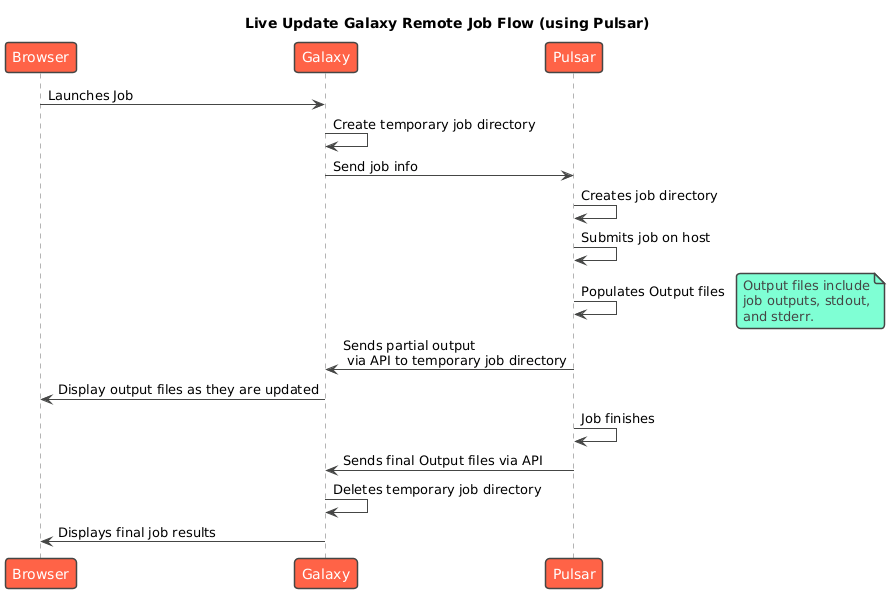
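For the local-runner case, "real time" mostly means re-reading a file that keeps growing. A minimal sketch, assuming the page (or an API endpoint behind it) remembers how many bytes it has already shown: each poll reads only the bytes appended since that offset. The helper name `read_new_output` is hypothetical, not part of Galaxy.

```python
import os


def read_new_output(path: str, offset: int) -> tuple[bytes, int]:
    """Return the bytes appended to `path` since `offset`, plus the new offset.

    Hypothetical helper: a live-output page would call this on every poll
    instead of re-reading the whole stdout/stderr file each time.
    """
    if not os.path.exists(path):
        # The tool may not have written anything yet.
        return b"", offset
    with open(path, "rb") as handle:
        handle.seek(offset)  # skip what the page has already displayed
        chunk = handle.read()
    return chunk, offset + len(chunk)
```

Returning raw bytes rather than text is deliberate: a poll can land in the middle of a multi-byte character, so decoding is better left to the display step.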

Unfortunately, it is not realistic for Galaxy deployments to rely on local job runners; instead, Galaxy can and should leverage external compute to run its jobs, using tools like Pulsar. Each of these tools would need to be modified to send the standard output and error back to Galaxy while jobs are running. For instance, we have already shown that Pulsar can be modified to do this:
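The remote-runner change can be pictured as a loop on the compute side that, on a fixed interval, picks up new stdout/stderr bytes and pushes them to Galaxy. This is a sketch under assumptions: `send_update` stands in for whatever transport Pulsar would actually use to reach Galaxy (it is not an existing Pulsar API), and `LiveOutputReporter` is a hypothetical class name.

```python
import os
import threading
from typing import Callable, Dict


class LiveOutputReporter:
    """Hypothetical Pulsar-side helper that forwards new stdout/stderr bytes."""

    def __init__(self, paths: Dict[str, str], send_update: Callable[[Dict[str, bytes]], None]):
        self.paths = paths  # e.g. {"stdout": ".../tool_stdout", "stderr": ".../tool_stderr"}
        self.send_update = send_update  # placeholder for the real transport to Galaxy
        self.offsets = {name: 0 for name in paths}

    def poll_once(self) -> Dict[str, bytes]:
        """Read whatever was appended since the last poll and send it, if anything."""
        update = {}
        for name, path in self.paths.items():
            if not os.path.exists(path):
                continue
            with open(path, "rb") as handle:
                handle.seek(self.offsets[name])
                chunk = handle.read()
            if chunk:
                self.offsets[name] += len(chunk)
                update[name] = chunk
        if update:
            self.send_update(update)
        return update

    def run(self, interval: float, stop: threading.Event) -> None:
        """Poll every `interval` seconds until `stop` is set."""
        # Event.wait returns False on timeout and True once the event is set.
        while not stop.wait(interval):
            self.poll_once()
        self.poll_once()  # final flush so output written just before stopping is not lost
```

Making `interval` a plain parameter keeps the update frequency controllable by whoever starts the reporter.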

One of the biggest hurdles here is the size of the standard output/error files. For large jobs, these updates would have to be kept small so as not to overwhelm the Galaxy server when, say, several jobs are running at once.
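One way to keep each update small is to cap the number of bytes sent per update. The sketch below keeps only the newest bytes of a chunk and reports how many were dropped, so the page can tell the user that output was omitted. Keeping the tail rather than the head is a design choice (for a running job the latest output is usually the most relevant), and `max_bytes` is a hypothetical parameter, not an existing Pulsar setting.

```python
from typing import NamedTuple


class CappedChunk(NamedTuple):
    data: bytes
    dropped: int  # how many older bytes were left out of this update


def cap_update(chunk: bytes, max_bytes: int) -> CappedChunk:
    """Keep at most `max_bytes` of the newest output in `chunk`."""
    if max_bytes <= 0:
        raise ValueError("max_bytes must be positive")
    if len(chunk) <= max_bytes:
        return CappedChunk(chunk, 0)
    return CappedChunk(chunk[-max_bytes:], len(chunk) - max_bytes)
```

If the sender still advances its read offset past the dropped bytes, the live view never falls more than one capped chunk behind, at the cost of showing a gap.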

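Another hurdle is that not every job runner functions like Pulsar: they are not guaranteed to have a way to send back updates regularly. Take this snippet from the _finish_or_resubmit_job method of the BaseJobRunner class, where the tool's stdout and stderr are read from files in the job's working directory once the job finishes: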

```python
def _finish_or_resubmit_job(self, job_state: "JobState", job_stdout, job_stderr, job_id=None, external_job_id=None):
    job_wrapper = job_state.job_wrapper
    try:
        job = job_state.job_wrapper.get_job()
        if job_id is None:
            job_id = job.get_id_tag()
        if external_job_id is None:
            external_job_id = job.get_job_runner_external_id()
        exit_code = job_state.read_exit_code()
        outputs_directory = os.path.join(job_wrapper.working_directory, "outputs")
        if not os.path.exists(outputs_directory):
            outputs_directory = job_wrapper.working_directory
        tool_stdout_path = os.path.join(outputs_directory, "tool_stdout")
        tool_stderr_path = os.path.join(outputs_directory, "tool_stderr")
        # TODO: These might not exist for running jobs at the upgrade to 19.XX, remove that
        # assumption in 20.XX.
        if os.path.exists(tool_stdout_path):
            with open(tool_stdout_path, "rb") as stdout_file:
                tool_stdout = self._job_io_for_db(stdout_file)
        # ... (rest of the method omitted)
```
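The path logic in this snippet does not depend on the job being finished, so a live view could reuse it while the job is still running. The helper below is a hypothetical refactoring that mirrors the snippet's behavior (prefer an `outputs/` subdirectory, otherwise fall back to the working directory itself); `tool_output_paths` is not an existing Galaxy function.

```python
import os
from typing import Tuple


def tool_output_paths(working_directory: str) -> Tuple[str, str]:
    """Return (stdout_path, stderr_path), resolved the same way as in the snippet."""
    outputs_directory = os.path.join(working_directory, "outputs")
    if not os.path.exists(outputs_directory):
        # Same fallback as the snippet: no outputs/ subdirectory, use the working directory.
        outputs_directory = working_directory
    return (
        os.path.join(outputs_directory, "tool_stdout"),
        os.path.join(outputs_directory, "tool_stderr"),
    )
```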

A quick look at some of the non-Pulsar job runners confirms that not every job runner necessarily handles stdout in a usable manner (see slurm.py, univa.py, etc.).

If we wanted a universal solution, we would have to modify the base job runner class to force job runners to provide updates. We would then have to update all the older job runners and force other people to update their own custom job runners.
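To make the cost of the universal approach concrete: if live output became a required part of the base class, for example as an abstract method, any runner that has not been updated would stop working entirely rather than merely lacking the feature. The class and method names below are hypothetical stand-ins, not Galaxy's actual BaseJobRunner interface.

```python
from abc import ABC, abstractmethod


class RunnerBase(ABC):
    """Hypothetical base job runner that *requires* live output."""

    @abstractmethod
    def get_live_output(self, job_id: str) -> bytes:
        """Return the stdout produced so far by a running job."""


class UpdatedRunner(RunnerBase):
    def get_live_output(self, job_id: str) -> bytes:
        return b"partial output"


class LegacyRunner(RunnerBase):
    """An older or third-party runner that was never updated."""


def can_instantiate(cls: type) -> bool:
    try:
        cls()
    except TypeError:
        # Python refuses to instantiate a class with unimplemented abstract methods.
        return False
    return True
```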

A Solution

The approach I think makes the most sense is to implement live updates only for the job runners that we use ourselves, and to have a way to indicate whether a given job supports live updates. In short, if a job is run using Pulsar or a local job runner, show a page that updates with the contents of standard output/error. If these files are too large, or the job runner doesn't support sending updates, the page should display a message explaining the issue to the user.
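The opt-in approach can be sketched as a capability flag that defaults to off, plus a size check, so the page either shows the output or explains why it cannot. Everything here (`supports_live_output`, `live_output_view`, the size limit, the message text) is an illustrative assumption, not existing Galaxy code.

```python
from dataclasses import dataclass
from typing import Optional


class JobRunnerBase:
    """Hypothetical base class: live output is opt-in, so old runners keep working."""

    supports_live_output = False


class PulsarLikeRunner(JobRunnerBase):
    supports_live_output = True


class LocalLikeRunner(JobRunnerBase):
    supports_live_output = True


class UnsupportedRunner(JobRunnerBase):
    """A runner that has not opted in."""


@dataclass
class LiveOutputView:
    text: Optional[str]
    message: Optional[str]


def live_output_view(runner: JobRunnerBase, output: bytes, max_bytes: int) -> LiveOutputView:
    """Decide what the live-output page should render for one job."""
    if not runner.supports_live_output:
        return LiveOutputView(None, "Live output is not available for this job runner.")
    if len(output) > max_bytes:
        return LiveOutputView(None, "Output is too large to display while the job is running.")
    # errors="replace" keeps the page rendering even if a multi-byte character was cut off.
    return LiveOutputView(output.decode("utf-8", errors="replace"), None)
```

Because the flag defaults to False, existing and third-party runners need no changes; they simply get the explanatory message.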

This solution has two issues. First, I'm not sure it would be acceptable to the broader Galaxy community, so we might have to be willing to maintain this feature for our internal use only. Second is scalability: even with a limit on how much standard output/error data is sent for each job, a large number of large jobs could mean the page cannot be updated often enough for this feature to be useful to users.
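The scalability concern can be estimated with simple arithmetic: if every running job sends at most `max_bytes` per update, once every `interval_s` seconds, Galaxy has to absorb up to `jobs * max_bytes / interval_s` bytes per second of live output. The function names and the numbers in the example are illustrative only, not measurements.

```python
def worst_case_ingest_rate(jobs: int, max_bytes: int, interval_s: float) -> float:
    """Upper bound on live-output bytes per second arriving at Galaxy."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return jobs * max_bytes / interval_s


def min_interval_for_budget(jobs: int, max_bytes: int, budget_bytes_per_s: float) -> float:
    """Shortest update interval that keeps the total under a bytes-per-second budget."""
    if budget_bytes_per_s <= 0:
        raise ValueError("budget_bytes_per_s must be positive")
    return jobs * max_bytes / budget_bytes_per_s


# Illustrative numbers: 500 jobs, 4 KiB per update, one update every 5 seconds.
print(worst_case_ingest_rate(500, 4096, 5))  # 409600.0 bytes/s (400 KiB/s)
```

Read the other way round, the second function shows the trade-off described above: for a fixed server budget, more (or larger) jobs force a longer update interval, which eventually makes the page too slow to be useful.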

Tasks and time estimates

Note: these tasks are listed in no particular order.

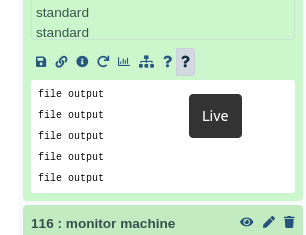

Create a new page that displays the stdout file.

This task would consist of creating the actual UI for this feature, including proper navigation to and from the page. The page would essentially have to poll the job directory for the stdout/error files and display them in a logical manner. The screenshots are just mocked-up HTML to give a general idea.

Estimate: 3-4 days

Update Pulsar's stdout updates (including the 10b limit and controllable update intervals).

This feature has already been partially developed. The only reason I'm estimating two days for the data limit modification is that we were considering letting Galaxy set the update interval inside the job description, and that feature was proving hard to implement.

Estimate: 2 days

Write tests in Pulsar.

This would involve writing tests for any new features in Pulsar.

Estimate: 1-2 days

Update the Galaxy architecture to get standard output from the job runner while the job is running.

The estimate for this task is longer because we might need to rewrite some of the Galaxy job runner code, and because of the push-back we might receive from the broader Galaxy community. If we limited the scope to Pulsar jobs only, this estimate would decrease.

Estimate: 2-3 weeks.

Create tests for the new features.

This would include UI tests, unit tests for any Galaxy backend changes, and any integration tests that would need to be fixed or added. This task is pretty broad, since I'm not entirely sure how much work would be needed here.

Estimate: 4-5 days

Overall estimate (including some leeway for back and forth with Galaxy dev team):

My estimates are based on my initial thoughts on these issues, as well as on how long some of my past tasks have taken me.

Estimate: 5-6 weeks.